Feature Services

Feature Services are sets of features which are exposed as an API. This API can be used for batch lookups of feature values (e.g. generating training datasets or feature dataframes for batch prediction), or low-latency requests for individual feature vectors.

Feature Services reference a set of features from Feature Views. Feature Views must be created before they can be served in a Feature Service, since Feature Services are only a consumption layer on top of existing features.

It is generally recommended that each model deployed in production have one associated Feature Service deployed, which serves features to the model.

A Feature Service provides:

- A REST API to access feature values at the time of prediction

- A one-line method call to rapidly construct training data for user-specified timestamps and labels

- The ability to observe the endpoint where the data is served to monitor serving throughput, latency, and prediction success rate

Defining a Feature Service

Define a Feature Service using the FeatureService class.

Attributes

A Feature Service definition includes the following attributes:

- name: The unique name of the Feature Service

- features: The features defined in a Feature View or Feature Table, and served by the Feature Service

- online_serving_enabled: Whether online serving is enabled for this Feature Service (defaults to True)

- Metadata used to organize the Feature Service. Metadata parameters include description, owner, family, and tags.

Example: Defining a Feature Service

The following example defines a Feature Service.

from tecton import FeatureService, FeaturesConfig
from feature_repo.shared.features.ad_ground_truth_ctr_performance_7_days import ad_ground_truth_ctr_performance_7_days
from feature_repo.shared.features.user_total_ad_frequency_counts import user_total_ad_frequency_counts
from feature_repo.shared.features.user_ad_impression_counts import user_ad_impression_counts

ctr_prediction_service = FeatureService(
    name='ctr_prediction_service',
    description='A Feature Service used for supporting a CTR prediction model.',
    online_serving_enabled=True,
    features=[
        # add all of the features in a Feature View
        user_total_ad_frequency_counts,
        # add a single feature from a Feature View using double-bracket notation
        user_ad_impression_counts[["count"]]
    ],
    family='ad_serving',
    tags={'release': 'production'},
    owner="matt@tecton.ai",
)

- The Feature Service uses the user_total_ad_frequency_counts and user_ad_impression_counts Feature Views.

- The list of features in the Feature Service is defined in the features argument. When you pass a Feature View in this argument, the Feature Service will contain all the features in the Feature View. To select a subset of features in a Feature View, use double-bracket notation (e.g. FeatureView[['my_feature', 'other_feature']]).
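The double-bracket notation described above is ordinary Python indexing with a list as the index: the outer brackets are the index operator and the inner brackets build the list of feature names. The sketch below illustrates that mechanism with a toy class. ToyFeatureView, expand_features, and the feature names last_seen, total_1d, and total_7d are hypothetical and are not part of the Tecton SDK; the real FeatureView class may behave differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ToyFeatureView:
    """Hypothetical stand-in for a Feature View; this is NOT the Tecton class."""
    name: str
    features: List[str]

    def __getitem__(self, selected: List[str]) -> "ToyFeatureView":
        # view[["a", "b"]] calls __getitem__ with the inner list ["a", "b"].
        if not isinstance(selected, list):
            raise TypeError("select features with a list, e.g. view[['count']]")
        unknown = [f for f in selected if f not in self.features]
        if unknown:
            raise KeyError(f"unknown features: {unknown}")
        # Return a narrowed copy that carries only the selected features.
        return ToyFeatureView(self.name, list(selected))


def expand_features(items: List[ToyFeatureView]) -> List[str]:
    """Flatten a `features` argument into qualified feature names."""
    return [f"{view.name}.{feature}" for view in items for feature in view.features]


# Feature names other than "count" are made up for illustration.
counts = ToyFeatureView("user_ad_impression_counts", ["count", "last_seen"])
frequency = ToyFeatureView("user_total_ad_frequency_counts", ["total_1d", "total_7d"])

# Passing a whole view keeps all of its features; indexing keeps a subset.
service_features = expand_features([frequency, counts[["count"]]])
print(service_features)
```

Passing the whole view contributes every feature it defines, while the indexed view contributes only the selected ones, which mirrors the behavior described for the features argument.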

Using Feature Services

Using the low-latency REST API Interface

See the Fetching Online Features guide.

Using the Offline Feature Retrieval SDK Interface

Use the offline or batch interface for batch prediction jobs or to generate training datasets. To fetch a dataframe from a Feature Service with the Python SDK as a client, use the FeatureService.get_historical_features() method.

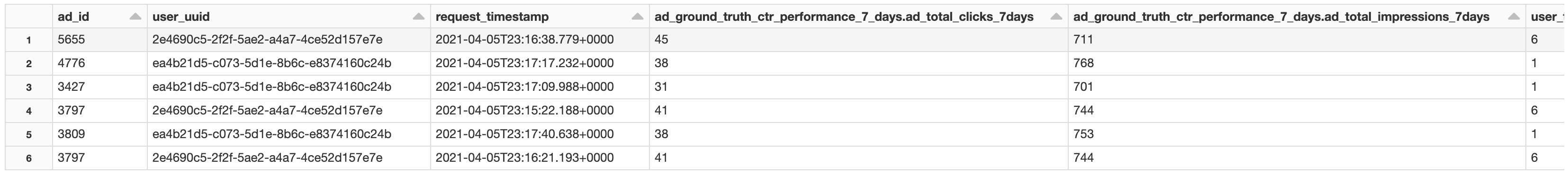

To make a batch request, first create a dataframe of events consisting of the join keys for prediction and the desired feature timestamps. Then, pass this events dataframe to the Feature Service method get_historical_features().

events = spark.read.parquet('dbfs:/sample_events.pq')
display(events)

Tecton then generates the feature values.

import tecton

feature_service = tecton.get_feature_service("price_prediction_feature_service")
result_spark_df = feature_service.get_historical_features(events).to_spark()

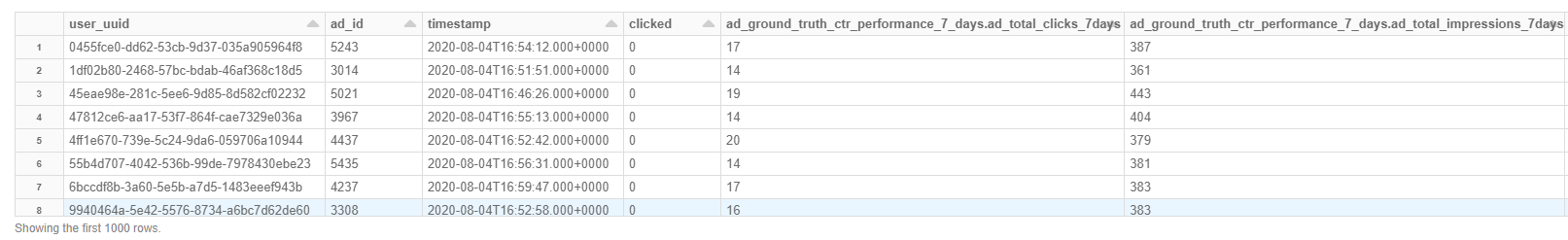
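As a conceptual aid, the sketch below shows one way to think about a batch lookup driven by join keys and timestamps. It assumes that each event row receives the latest feature value recorded at or before that event's timestamp. This is an illustration in plain Python, not Tecton's implementation; the names value_as_of, join_events, item_id, and price_avg are hypothetical.

```python
from bisect import bisect_right
from datetime import datetime
from typing import Dict, List, Optional, Tuple

# Hypothetical feature history: join key -> (timestamp, value) pairs sorted by timestamp.
FeatureHistory = Dict[str, List[Tuple[datetime, float]]]


def value_as_of(history: FeatureHistory, key: str, ts: datetime) -> Optional[float]:
    """Return the latest value recorded at or before `ts`, or None if there is none."""
    rows = history.get(key, [])
    timestamps = [row_ts for row_ts, _ in rows]
    # bisect_right counts how many recorded timestamps are <= ts.
    idx = bisect_right(timestamps, ts)
    return rows[idx - 1][1] if idx > 0 else None


def join_events(events: List[dict], history: FeatureHistory) -> List[dict]:
    """Attach a point-in-time feature value to every event row."""
    return [
        {**event, "price_avg": value_as_of(history, event["item_id"], event["timestamp"])}
        for event in events
    ]


history = {
    "item_1": [(datetime(2023, 1, 1), 10.0), (datetime(2023, 1, 3), 12.0)],
}
events = [
    {"item_id": "item_1", "timestamp": datetime(2023, 1, 2)},
    {"item_id": "item_1", "timestamp": datetime(2023, 1, 4)},
    {"item_id": "item_2", "timestamp": datetime(2023, 1, 4)},
]

rows = join_events(events, history)
for row in rows:
    print(row["item_id"], row["timestamp"].date(), row["price_avg"])
```

Under this assumption, an event never sees a value written after its own timestamp, and a join key with no history yields an empty value rather than an error.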

Using Feature Logging

Feature Services have the ability to continuously log online requests and feature vector responses as Tecton Datasets. These logged feature datasets can be used for auditing, analysis, training dataset generation, and spine creation.

To enable feature logging on a Feature Service, add a LoggingConfig as in the example below, and optionally specify a sample rate with sample_rate. You can also set log_effective_times=True to log the feature timestamps from the Feature Store. As a reminder, Tecton will always serve the latest stored feature values as of the time of the request.

Run tecton apply to apply your changes.

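from tecton import FeatureService, LoggingConfig
from feature_repo.shared.features.ad_ground_truth_ctr_performance_7_days import ad_ground_truth_ctr_performance_7_days
from feature_repo.shared.features.user_total_ad_frequency_counts import user_total_ad_frequency_counts

ctr_prediction_service = FeatureService(
    name='ctr_prediction_service',
    features=[
        ad_ground_truth_ctr_performance_7_days,
        user_total_ad_frequency_counts
    ],
    # log a sample of online requests and their feature vector responses
    logging=LoggingConfig(
        sample_rate=0.5,
        log_effective_times=False,
    )
)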

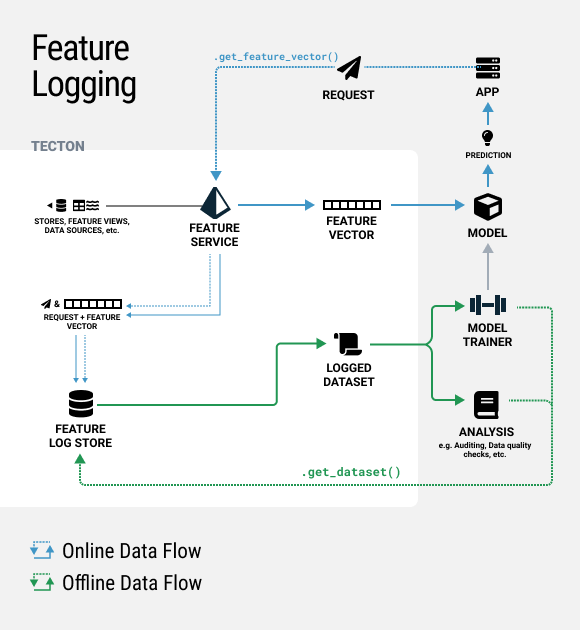

This creates a new Tecton Dataset under the Datasets tab in the Web UI. New feature logs are appended to this dataset every 30 minutes. If the features in the Feature Service change, a new dataset version is created.
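To make the sample_rate setting from the logging example concrete, the sketch below assumes that each online request is kept independently with probability sample_rate, so sample_rate=0.5 logs roughly half of all requests. How Tecton actually selects requests is not described here, so treat this as an illustration only; sample_requests and request_id are hypothetical names.

```python
import random
from typing import Iterable, List


def sample_requests(requests: Iterable[dict], sample_rate: float, seed: int = 0) -> List[dict]:
    """Keep each request independently with probability `sample_rate` (assumed semantics)."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0.0 and 1.0")
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    # rng.random() is in [0.0, 1.0), so 1.0 keeps everything and 0.0 keeps nothing.
    return [request for request in requests if rng.random() < sample_rate]


online_requests = [{"request_id": i} for i in range(10_000)]
logged = sample_requests(online_requests, sample_rate=0.5)
print(f"logged {len(logged)} of {len(online_requests)} requests")
```

A lower sample rate reduces the volume of logged data at the cost of a less complete record of online traffic.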

The logged dataset can be fetched in a notebook using the code snippet below.

Important

AWS EMR users will need to follow the instructions for installing Avro libraries in notebooks to use Tecton Datasets, since features are logged in Avro format.

import tecton

dataset = tecton.get_dataset('ctr_prediction_service.logged_requests.4')
display(dataset.to_spark())